
I am the youngest of three boys raised by a working single mom in the 1960s and 1970s. We lived toward the poor end of the spectrum, so much of my childhood was spent devoid of television (back in those days, TV was considered a luxury, not a necessity). Due to these circumstances, I was well into my teen years before I encountered the idea that women could be anything other than strong, intelligent and capable. It still baffles me when I encounter people who start from an assumption that women are not strong, intelligent and capable. Especially because so many of them actually consider themselves to be feminists.

Needless to say, I am often misunderstood by people when the discussion turns to sexism and women’s issues (yes – I am allowed to discuss these things even though I have a penis). Usually it’s because I don’t assume women need protection. And because I assume they are relatively intelligent adult human beings, so when they do stupid things my initial response is other than “Oh, you poor thing!”. In fact, I have a universal response to stupidity that is colorblind and genderless. Those of you who know me have encountered it frequently.

So I generally try to avoid these discussions, especially on the Internet. When I look at a situation and say “Why in hell did she do something so dumb?” I immediately get attacked by a half-dozen or so ‘feminists’ who demand to know why I’m “blaming the victim” and/or being such a sexist. Which leaves me wondering why these ‘feminists’ think their role is to gallantly provide protection for someone they claim to consider a strong, intelligent, capable equal.

A large part of the problem is the simple fact that the Internet is a piss-poor vehicle for human interaction and communication. This is no fault of the Internet but is rather due to the fact that most humans are not very good at communicating. And extremely few of us are skilled at communicating using only the written word. This is why people who are good at it get paid for doing so.

So when we do try to discuss important issues on the Internet we usually screw it up. As far as I can tell, the overwhelming majority of the ‘discussions’ on the Internet about sexism consist solely of people pointing out instances of sexism and screaming “Look everyone! A bad thing!”. Just in case we didn’t already know that sexism is bad.

I, on the other hand, want to know why the strongest, most intelligent, most capable, most badass woman on the face of the planet still occasionally needs someone else to tell her she’s pretty. And I think maybe this is the kind of thing we should be talking about. The parts of the issue that are complicated and that maybe make us a little uncomfortable.

Which brings us to Go Set a Watchman.

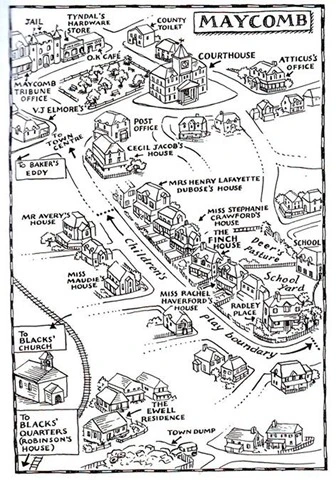

As should be obvious, the following will contain spoilers (although probably not anything you haven’t already heard). If you haven’t read Go Set a Watchman and intend to, you might want to stop reading at this point (I’m a Map Dork, so as a buffer I’ll throw in a map [found here]).

Still here? Good. I’ll get right to the point: Atticus Finch is a racist. I know this is not easy to accept, but it is, in fact, even evident in To Kill a Mockingbird (although not obvious. That is reserved for Go Set a Watchman). Before you get too upset, though, let me explain a couple things. First off, Atticus Finch is a racist, but only by today’s standards. By the standards of his own time (To Kill a Mockingbird takes place in the mid-1930s, when Atticus was in his early 50s. Go Set a Watchman takes place in the mid-1950s) he was something else entirely. Second, Atticus was what I think of as a ‘benevolent racist’. Unlike most of his contemporaries he didn’t consider black people to be subhuman (yes – I said black people. Political correctness is the process of white people sitting around deciding what the new labels should be. I don’t subscribe), nor did he in any way consider them to be undesirable or even unlikable. He just didn’t consider them to be equal. In To Kill a Mockingbird Jean Louise (a.k.a. Scout) states:

“Atticus says cheatin’ a colored man is ten times worse than cheatin’ a white man”

Later, Atticus himself says:

“There’s nothing more sickening to me than a low-grade white man who’ll take advantage of a Negro’s ignorance.”

Atticus’ racism is there, if you have eyes to see it. Go Set a Watchman just makes it more blatant and obvious. But it’s not any different. Atticus is not any different. His form of racism is a condescending one. He views black people very much as though they are children. Children who need our (read: white people’s) help.

Jean Louise, however, is not racist. She is described (by herself, in the interest of full disclosure) as ‘colorblind’. Despite growing up in Alabama in the 1930s and 1940s. How did this happen? Because she was raised by Atticus Finch. To Kill a Mockingbird and Go Set a Watchman are, in fact, two parts of one story. Jean Louise Finch’s story. The story of her relationship with her father. And how Scout, like every child, eventually comes to terms with her father’s humanity. How she finally realizes that Atticus Finch has as much right to be flawed as the rest of us.

Jean Louise eventually accepts the fact that her father is human and he therefore has faults. And she realizes that he is not defined by his faults. For his part, Atticus learns that he has succeeded in the task that all good parents set for themselves: he has raised a child who is better than he is (which, by the way, may not have happened if Atticus hadn’t actively defied the dictates of his family and community to allow his daughter to grow up to be exactly the person she desired to be). At the end of the day, though, Atticus lived in a time and place that was both extremely racist and extremely sexist, and he was years ahead of his time on both these issues. But not immune to them. And – truth be told – I’m okay with that.

I am finding, however, that many of the people I know are not. I am a little surprised and dismayed by how many of my friends are actively avoiding reading Go Set a Watchman (some of them have even concocted elaborate reasons for it). I wish I could say the reason for this is simply because they don’t want to face the fact that Atticus is a racist. The truth is that they don’t want to face what Atticus Finch’s racism represents.

We here in the Northeast live in a fuzzy pink bubble wherein we think we have largely beaten racism (we are wrong, and we are also not alone in this). Because of this, we believe that there are precisely two types of racists in this world: bad people and stupid people. We honestly believe that at least one of those two conditions must be in place before racism can even exist, let alone thrive. So the idea of an inherently decent and intelligent person (like Atticus Finch) who is also a racist is complicated and it makes us uncomfortable and we don’t want to look at it so we instead decide that it can’t exist.

Which only serves to prove that we are failing to understand the nature of racism.

See, racism is not rational. This is why it does not respond to reason. Nobody sits down, analyzes all the available evidence, then concludes that the only logical course of action is to be a racist. Racism arrives through a different vector, and for this reason we cannot combat it effectively with logic and reason. Also for this reason, otherwise decent and intelligent people can sometimes turn out to be racists. This invariably occurs during childhood. If you spend the bulk of your formative years surrounded by a certain way of thinking, there’s a decent chance you will come to believe that said certain way of thinking is normal and/or proper. Sexism often procreates by this method as well. As does religion.

Relax. Before you throw a hissy and accuse me of badmouthing religion, take a moment to look up the word ‘rational’. And know that most of the religions of the world will back me up on this. One does not reason one’s way to God. Religion is not logical nor does it desire to be. Belief is arrived at through other means.

This is why belief systems (good or bad) need to be kept in check via legislation. We cannot carefully explain the facts and then expect racists to become colorblind. We cannot throw logic at sexists and then expect them to suddenly support paycheck equality. We cannot reason with the religious right and then expect them to see the light in regard to marriage equality. It simply will not happen and thinking otherwise is just plain dumb. We need Affirmative Action. We need the Nineteenth Amendment. We need separation of church and state (make no mistake, folks – the Founding Drunkards were not concerned about freedom of religion. They were concerned about freedom from religion). Rationality cannot be applied to belief systems, so the only recourse a rational society has is to protect the general populace from them.

Note: This is the fourth and last part in a series on building your own home-brewed map server. It is advisable to read the previous installments, found here, here and here.

This is the point at which the tutorial-like flavor of this series breaks down, for a variety of reasons. From here on, we’ll be dealing with divergent variables that cannot be easily addressed. We’ll discuss them each as they come up. Suffice to say that from now on I can only detail the steps I have taken. Any steps you take will depend on your equipment and circumstances.

Having finished putting my server together, I decided it was time to give it a face to show the world. Before I could do so, however, I had to give it a more substantial connection to that world, a process that began with establishing a dedicated address (domain). The most common method of achieving this is to simply purchase one (e.g., http://www.myinternetaddress.com). There are a variety of web hosts you can turn to for this. I cannot personally recommend any of them (due only to personal ignorance).

For the purpose of this exercise I didn’t feel I needed a whole lot (all I really wanted was an address, since I intended to host everything myself), so I went to DynDNS and created a free account (thanks, Don). DynDNS set me up with a personal address, and the use of their updater makes it work for dynamic addresses (which most routers provide). The web site does a decent job of walking you through the process, including setting up port forwarding in your router.

Exactly how to go about forwarding a port is particular to the router in question, so I won’t go into it in detail. I will say that it is not something that should be approached lightly. Port forwarding can pose certain security risks. It’s a very good idea to do some research into the process before you dabble in it.

Once I had an address and a port through which to use it, I had to choose a front end for my server. I was tempted to go with Drupal, mainly because it has the best documented means with which to serve up TileStream, but also because I’ve been meaning to learn my way around Drupal for some time now.

In the end, I realized that my little server, despite being almost Thomas-like in its dedication and willingness to serve, just doesn’t have the cojones necessary for serving those kinds of tiles. Truth is, if I wanted my own custom base map tiles in an enterprise environment, I’d purchase MapBox’s TileStream hosting rather than serving it myself, anyway. (Umm… I really couldn’t have been more wrong about this.)

And so I decided learning Drupal could wait for another day. Instead I chose to go with WordPress, for several reasons. I’m reasonably familiar with it, it’s a solid, well-constructed application, it’s extremely customizable, and it has an enormous, dedicated user base who have written huge amounts of themes and plugins. And while WordPress was originally intended to be a blogging platform (and remains one of the best), it’s easy enough to reconfigure it for other purposes.

Installing WordPress is a snap since we included LAMP (Linux, Apache, MySQL and PHP) while initially installing Ubuntu Server Edition. In the terminal, type:

sudo apt-get install wordpress

Let it do its thing. When it asks if you want to continue, hit ‘y’. When it gets back to the prompt, type:

sudo ln -s /usr/share/wordpress /var/www/wordpress

sudo bash /usr/share/doc/wordpress/examples/setup-mysql -n wordpress mysite.com

But replace ‘mysite.com’ with the address you created at DynDNS.
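If you’d rather not type the three commands above one at a time, they can be collected into a small script. This is a minimal sketch, not part of the original walkthrough: `setup_wordpress` is a hypothetical helper, `-y` answers apt-get’s “continue?” prompt for you, and the `RUN` variable is an added assumption so you can preview the commands (set `RUN=echo`) before running them for real.

```shell
#!/usr/bin/env bash
# Hypothetical helper: runs the three WordPress setup steps from this post.
# Usage: setup_wordpress your.dyndns.address
# Set RUN=echo to print the commands instead of executing them.
setup_wordpress() {
    local address="$1"
    local run="${RUN:-}"   # empty by default, so the commands really run
    if [ -z "$address" ]; then
        echo "usage: setup_wordpress ADDRESS" >&2
        return 1
    fi
    # Install Ubuntu's WordPress package without stopping at the y/n prompt.
    $run sudo apt-get install -y wordpress || return 1
    # Expose the packaged files under Apache's web root.
    $run sudo ln -s /usr/share/wordpress /var/www/wordpress || return 1
    # Create the MySQL database and WordPress config for this address.
    $run sudo bash /usr/share/doc/wordpress/examples/setup-mysql -n wordpress "$address"
}
```

For example, `RUN=echo setup_wordpress myname.dyndns.org` prints the three commands with your address filled in; drop `RUN=echo` to execute them.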

Back to Webmin. On the sidebar menu, click on Servers→Apache Webserver→Virtual Server. Scroll down to the bottom. Leave the Address at ‘Any’. Specify the port you configured your router to forward (should be port 80, the default for HTTP). Set the Document Root by browsing to /var/www/wordpress. Specify the Server Name as the address you created at DynDNS (the full address – include http://). Stop and start Apache for good measure.
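For those who prefer editing Apache’s configuration directly instead of clicking through Webmin, the settings above correspond roughly to a `<VirtualHost>` block. The sketch below is an assumption about the equivalent directives, not a copy of what Webmin writes; `vhost_conf` is a hypothetical helper that only prints the block so you can inspect it before saving it (on Ubuntu, site files usually live under /etc/apache2/sites-available/ and are enabled with `a2ensite`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints an Apache <VirtualHost> block equivalent to the
# Webmin settings described above (any address, forwarded port, WordPress root).
# Usage: vhost_conf ADDRESS [PORT] [DOCROOT]
vhost_conf() {
    local address="$1"
    local port="${2:-80}"                    # 80 is the HTTP default
    local docroot="${3:-/var/www/wordpress}" # the symlink created earlier
    cat <<EOF
<VirtualHost *:${port}>
    ServerName ${address}
    DocumentRoot ${docroot}
</VirtualHost>
EOF
}
```

Printing rather than writing the file keeps the helper harmless; you decide where the output goes.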

Now you should be able to point your browser to your DynDNS-created address (hereafter referred to as your address) to complete your configuration of WordPress. You will have to make many decisions. Choose wisely.

Once you have WordPress tweaked to your satisfaction, you’re probably going to want to add some web map functionality to it. First and easiest is Flex Viewer. All you have to do is move the ‘flexviewer’ folder from /var/www to /usr/share/wordpress. The file manager in Webmin can do this easily. Once you’re done, placing a Flex Viewer map on a page looks something like this:

<iframe style="border: none;" height="400" width="600" src="http://your address/flexviewer/index.html"></iframe>

Straightforward HTML. Nothing fancy, once all the machinery is in place.

Which gets a little trickier for GeoServer. By design, GeoServer only runs locally (localhost). In order to send GeoServer maps out to the universe at large, we have to do so through a proxy. This has to be configured in Apache. Luckily, Webmin makes it a relatively painless process.

We’ll start by enabling the proxy module in Apache. Click on Servers→Apache Webserver→Global Configuration→Configure Apache Modules. Click the checkboxes next to ‘proxy’ and ‘proxy_http’, then click on the ‘Enable Selected Modules’ button at the bottom. When you return to the Apache start page, click on ‘Apply Changes’ in the top right-hand corner.

Having done that, we can point everything in the right direction. Go to Servers→Apache Webserver→Virtual Server→Aliases and Redirects. Scroll to the bottom and fill in the boxes thus:

Your server will have a name other than maps. Most likely, it will be localhost. In any case, you can find it by looking in the location bar when you access the OpenGeo Suite. Apply the changes again, and you might as well stop Apache and restart it for good measure.
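Whatever values go into that form, the effect is to map public URL paths onto GeoServer’s local address. As a sketch under stated assumptions (GeoServer and GeoExplorer listening on localhost port 8080, as in the GeoExplorer embed code in this post, and exposed as /geoserver and /geoexplorer), the equivalent mod_proxy directives look like the output of the hypothetical `proxy_conf` helper below. On Ubuntu, `sudo a2enmod proxy proxy_http` enables the same two modules as the Webmin checkboxes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints ProxyPass/ProxyPassReverse pairs that forward
# public URL paths to a service listening only on the server itself.
# Usage: proxy_conf BACKEND PATH...  e.g. proxy_conf http://localhost:8080 /geoserver
proxy_conf() {
    local backend="${1%/}"   # strip a trailing slash from the backend URL
    shift
    local path
    for path in "$@"; do
        # Forward requests for this path to the same path on the backend...
        echo "ProxyPass ${path} ${backend}${path}"
        # ...and rewrite redirect headers (e.g. Location) coming back from it.
        echo "ProxyPassReverse ${path} ${backend}${path}"
    done
}
```

For example, `proxy_conf http://localhost:8080 /geoserver /geoexplorer` prints four directives that would go inside your virtual server’s configuration.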

You can now configure and publish maps through GeoExplorer. The only caveat is that GeoExplorer will give you code that needs a minor change. It will use a local address (i.e., localhost:8080) that needs to be updated. Example:

<iframe style="border: none;" height="400" width="600" src="http://localhost:8080/geoexplorer/viewer#maps/1"></iframe>

changes to

<iframe style="border: none;" height="400" width="600" src="http://your address/geoexplorer/viewer#maps/1"></iframe>
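If you publish more than a map or two, that hand edit gets tedious. A minimal sketch, assuming GeoExplorer always emits `http://localhost:8080/` as in the example above; `fix_embed` is a hypothetical name for a sed filter that swaps in your address.

```shell
#!/usr/bin/env bash
# Hypothetical filter: rewrites GeoExplorer's local embed URLs to a public address.
# Reads HTML on stdin, writes the fixed HTML to stdout.
# Usage: fix_embed myname.dyndns.org < embed.html
# Assumes the address contains no '|', '&' or '\' (special to sed here).
fix_embed() {
    local address="$1"
    sed "s|http://localhost:8080/|http://${address}/|g"
}
```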

And that – as they say – is that. Much of this has entailed individual choices and therefore leaves a lot of room for variation, but I think we’ve covered enough ground to get you up and running. If you want to see my end result, you can find it at:

The Monster Fun Home Map Server Webby Thing

I won’t make any promises as to how long I will keep it up and running, but it will be there for a short while, at least. Keep in mind that it is a work in progress. So be nice.

Update: My apologies to anyone who may give a crap, but I have pulled the plug on the Webby Thing. It was really just a showpiece, and I just couldn’t seem to find the time to maintain it properly. And frankly, I have better uses for the server. Sorry.

Note: This is the third part in a series on building your own home-brewed map server. It is advisable to read the previous installments, found here and here.

Last time, I walked you through installing TileMill, and I promised a similar treatment for TileStream and Flex Viewer. I am a man of my word, so here we go. Don’t worry – this will be easy in comparison to what we’ve already accomplished.

We’ll start with TileStream, simply because we’re going to have to avail ourselves of the command line. Once again, you can either plug a keyboard and monitor into your server or use whatever SSH client you’ve been using thus far.

Once you’re in the terminal, take control again (‘sudo su’). For your TileStream installation, you can follow the installation instructions as presented, except for one detail: it’s assumed we already have an application we don’t have. Let’s correct that:

sudo apt-get install git

And then proceed with the installation (don’t forget to hit ‘enter’ after each command):

sudo apt-get install curl build-essential libssl-dev libsqlite3-0 libsqlite3-dev

git clone -b master-ndistro git://github.com/mapbox/tilestream.git

cd tilestream

./ndistro
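Both TileStream here and Mapnik in the previous post trip over a tool that isn’t installed yet (git and Subversion, respectively). A small pre-flight check can catch that before a build starts. This is a sketch, not part of TileStream’s instructions; `require_cmds` is a hypothetical name, and it relies only on bash’s built-in `command -v`.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check: reports every listed command that is missing.
# Returns 0 if all are present, 1 otherwise.
# Usage: require_cmds git curl make g++ && ./ndistro
require_cmds() {
    local missing=0 cmd
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "missing: $cmd" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

Running `require_cmds git curl make g++` before `./ndistro` would name anything still to be installed (make and g++ come with build-essential).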

And that’s that (TileStream, even more than TileMill, will throw up errors during the installation. None of them should stop the process, though, so you can safely ignore them). Like TileMill, TileStream needs to be started before it can be accessed in a browser. Since the plan is to run the server headless, let’s set this up in Webmin in a fashion similar to the one employed for TileMill.

Back to Webmin, again open the ‘Other’ menu, and this time click on ‘Custom Commands’. We’ll create a new Custom Command and configure it as follows (substitute your name for mine as appropriate):

Save it, and you will now have a Custom Command button to use for starting TileStream (we didn’t do this for TileMill because we cannot. The Webmin Custom Command function simply won’t accept it. I think it has to do with the nature of the command. I think the ‘./’ in the TileMill command confuses it).

At this point, TileStream is fully functional, but it doesn’t yet have a tileset to work with. Using the same browser with which you just accessed Webmin, go here to download one. Scroll down the page, pick a tileset you like and click on it to proceed to the download page (I picked World Light). Download the file to wherever you please. Once you have the file, go back to Webmin and open the ‘Other’ menu again. Click on ‘Upload and Download’, then select the ‘Upload to Server’ tab. Click on one of the buttons labeled ‘Choose File’, then browse to the tileset file you downloaded. For ‘File or directory to upload to’, click the button and browse your way to /home/terry/tilestream/tiles (by now, you should know you’re not ‘terry’). Click the ‘Upload’ button.

Once your tileset is finished uploading, you can point the browser to http://maps:8888 (yeah, yeah – not ‘maps’) to access TileStream. Enjoy:

Our last order of business is Flex Viewer (otherwise known as ‘ArcGIS Viewer for Flex’). This is the easiest of the lot, mainly because it doesn’t actually have to be installed. Still using the same browser, go to the download page (you’ll need an ESRI Global Account. If you don’t have one, create one), agree to the terms and download the current version (again – download it to wherever you please). Once you have the package, use Webmin to upload it to the server. This time you’ll want to upload the file to /var/www and you’ll want to check the ‘Yes’ button adjacent to ‘Extract archive or compressed files?’

And you’re in. Point the browser to http://maps/flexviewer/ (you know the drill) and play with your new toy:

You can see I have customized the flex viewer. You should do so as well (it’s designed for it, after all). Open the file manager in Webmin (the ‘Other’ menu again) and navigate to /var/www/flexviewer. Select config.xml, then click the ‘Edit’ button on the toolbar. The rest is up to you.

* * * * *

So now you have a headless Ubuntu map server up and running, and the question you are probably asking yourself is: “Do I really need all this stuff running in my server?” The answer is, of course, ‘no’. The point of this exercise was to learn a thing or two. If you’ve actually been following along and have these applications running in your own machine, you are now in a good position to poke around for a while to figure out what sort of server you’d like to run.

For instance, there’s no real reason to run TileMill on a server. TileMill doesn’t serve tiles, it fires them. Therefore it’s probably not the best idea to be eating up your server’s resources with TileMill (and it seriously devours resources). The server doesn’t have a use for the tiles until they’re done being fired, at which point TileStream is the tool for the job.

That said, there’s no compelling reason why you couldn’t run TileMill on your server. If you’d rather not commit another machine to the task (and if you’re not in any kind of hurry), why not give the job to the server? It’ll take it a while, but it will get the tiles fired (if your server is an older machine like mine, I would strongly advise you to fire your tiles in sections, then put them together later. I suggest firing them one zoom level at a time and combining them with SQLite Compare).

Flex Viewer and the OpenGeo Suite don’t often go together, but there’s no reason why they can’t. Flex Viewer can serve up layers delivered via WMS – there’s nothing to say GeoServer can’t provide that service. They are, however, very different applications, with vastly different capabilities, strengths and weaknesses. They also have a very different ‘feel’, and we should never discount the importance of aesthetics in the decision making process.

A final – and very important – consideration in the final configuration of our home server is the nature of the face it presents to the world. In order for a server to serve, it must connect to and communicate with the world at large. This means some kind of front end, the nature of which will influence at least some of our choices.

Which brings us neatly to the next post. See you there.

Note: This is the second part in a series on building your own home-brewed map server (I would tell you how many installments the series will entail, but I won’t pretend to have really thought this through. There will be at least one more. Probably two). It assumes you have read the previous installment. You have been warned.


Last time, I walked you through setting up your very own headless map server using only Free and Open Source Software. Now, I’m going to show you how to trick it out with a few extra web mapping goodies. The installation process will be easiest if you re-attach a ‘head’ to your server (i.e., a monitor and keyboard), so go ahead and do that before we begin (alternatively, if you’re using PuTTY to access your headless server, you can use it for this purpose).

At the end of my last post, I showed you all a screenshot of my server running TileMill, TileStream and Flex Viewer, and I made a semi-promise to write something up about it. So here we are.

I tend toward a masochistic approach to most undertakings in my life, and this one will not deviate from that course. Whenever I am faced with a series of tasks that need completion, I rank them in decreasing order of difficulty and unpleasantness, and I attack them in that order. In other words, I work from the most demanding to the least troublesome.

I originally intended to write a single post covering TileMill, TileStream and Flex Viewer, but a short way into this post I realized that I had to split it into two pieces. The next post will cover TileStream and Flex Viewer. This one will get you through TileMill.

TileMill can be a bear to install – not because you need catlike reflexes or forbidden knowledge or crazy computer skills – but simply because there are many steps, which translate into lots of room for error. A quick glance at TileMill’s installation instructions may seem a bit daunting (especially if you’re new to this kind of thing):

Install build requirements:

# Mapnik dependencies

sudo apt-get install -y g++ cpp \

libboost-filesystem1.42-dev \

libboost-iostreams1.42-dev libboost-program-options1.42-dev \

libboost-python1.42-dev libboost-regex1.42-dev \

libboost-system1.42-dev libboost-thread1.42-dev \

python-dev libxml2 libxml2-dev \

libfreetype6 libfreetype6-dev \

libjpeg62 libjpeg62-dev \

libltdl7 libltdl-dev \

libpng12-0 libpng12-dev \

libgeotiff-dev libtiff4 libtiff4-dev libtiffxx0c2 \

libcairo2 libcairo2-dev python-cairo python-cairo-dev \

libcairomm-1.0-1 libcairomm-1.0-dev \

ttf-unifont ttf-dejavu ttf-dejavu-core ttf-dejavu-extra \

subversion build-essential python-nose

# Mapnik plugin dependencies

sudo apt-get install libgdal1-dev python-gdal libgdal1-dev gdal-bin \

postgresql-8.4 postgresql-server-dev-8.4 postgresql-contrib-8.4 postgresql-8.4-postgis \

libsqlite3-0 libsqlite3-dev

# TileMill dependencies

sudo apt-get install libzip1 libzip-dev curl

Install mapnik from source:

svn checkout -r 2638 http://svn.mapnik.org/trunk mapnik

cd mapnik

python scons/scons.py configure INPUT_PLUGINS=shape,ogr,gdal

python scons/scons.py

sudo python scons/scons.py install

sudo ldconfig

Download and unpack TileMill. Build & install:

cd tilemill

./ndistro

It’s not as scary as it looks (the color-coding is my doing, to make it easy to differentiate things). The only circumstance that makes this particular process difficult is that the author of these instructions assumes we know a thing or two about Linux and the command line.

Let’s start at the top, with the first ‘paragraph’, which begins: # Mapnik dependencies. Translation: We will now proceed to install all the little tools, utilities, accessories and such-rot that Mapnik (a necessary and desirable program) needs to function (i.e., “dependencies”).

It is assumed that we know the entire ‘paragraph’ is one command, and that the backslashes at the ends of the lines are not carriage returns but line continuations (and shouldn’t be followed by spaces). It is also assumed that we will notice any errors that may occur during this process, know whether we need concern ourselves with them and (if so) be capable of correcting them.

Let’s see what we can do about this, shall we? Since we’re installing this on our server and actually typing in the commands (rather than copying and pasting the whole thing), we have the luxury of slicing it up into bite-sized pieces. This way the process becomes much less daunting, and it makes it easier for us to correct any errors that crop up along the way.

We’ll start by taking control. Type “sudo su” (sans quotation marks), then provide your password. Now we can proceed to install everything, choosing commands of a size we’re comfortable with. I found that doing it one line at a time works pretty smoothly. Two important points here: start every command with “sudo apt-get install” (not just the first line) and don’t include the backslashes (unless you’re installing more than one line at a time). I would therefore type in the first two lines like this (don’t forget to hit ‘enter’ at the end of each command):

sudo apt-get install -y g++ cpp

sudo apt-get install libboost-filesystem1.42-dev

You get the idea. Continue along in this fashion until you have installed all the necessary dependencies for Mapnik. I strongly recommend doing them all in one sitting. It just makes it easier to keep track of what has and hasn’t been installed.

At this stage of the game, any errors you encounter will most likely be spelling errors. Your computer will let you know when you mistype, usually through the expedient of informing you that it couldn’t find the package you requested. When this occurs, just double-check your spelling (hitting the ‘up’ cursor key at the command prompt will cause the computer to repeat your last command. You can then use the cursors to correct the error). At certain points in the installation process, your server will inform you of disk space consumption and ask you to confirm an install (in the form of yes/no). Hitting ‘y’ will keep the process moving along.
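The line-by-line approach can also be scripted so that a typo in one package name doesn’t stop the rest, and the failures are listed at the end. This is a sketch, not TileMill’s official procedure: `install_in_pieces` is a hypothetical helper meant to be run as root (after ‘sudo su’), each argument is one ‘line’ of packages, and the `APT` variable is an assumption that lets you substitute `echo apt-get` for a dry run.

```shell
#!/usr/bin/env bash
# Hypothetical helper: installs each group of packages with its own apt-get call
# and lists the groups that failed, so misspelled names are easy to spot.
# Usage: install_in_pieces "g++ cpp" "libboost-filesystem1.42-dev" ...
# Set APT="echo apt-get" for a dry run.
install_in_pieces() {
    local apt="${APT:-apt-get}"
    local group
    local failed=()
    for group in "$@"; do
        # $group is unquoted on purpose: one line may hold several packages.
        if ! $apt install -y $group; then
            failed+=("$group")
        fi
    done
    if [ "${#failed[@]}" -gt 0 ]; then
        printf 'failed: %s\n' "${failed[@]}" >&2
        return 1
    fi
}
```

Any group reported as failed can then be fixed and re-run on its own, just as you would with the up-arrow trick.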

While packages install in your system, slews of code will fly by on your screen, far too fast to read or comprehend. Just watch it go by and feel your Geek Cred grow.

By now you should have developed enough Dorkish confidence to have a go at # Mapnik plugin dependencies and # TileMill dependencies. Have at it.

When you’re done, move on to installing Mapnik from source. Each line of this section is an individual command that should be followed by ‘enter’. The first line will throw up your first real error. Simply paying attention to your server and following the instructions it provides will fix the problem (in case you missed it, the error occurred because you haven’t installed Subversion, an application you attempted to use by typing the command ‘svn’. Easily fixed by typing sudo apt-get install subversion). You can then re-type the first line and proceed onward with the installation. When you get to the scons commands, you will learn a thing or two about patience. Wait it out. It will finish eventually.

Now we should be ready to do what we came here to do: install TileMill. Unfortunately, TileMill’s installation instructions aren’t very helpful at this point for a headless installation. All they tell us is to “Download and unpack TileMill”. There’s a button further up TileMill’s installation page for the purpose of the ‘download’ part of this, but it’s not very helpful for our situation. We could use Webmin to manage this, but what the hell – let your Geek Flag fly (later on, we’ll use Webmin to install Flex Viewer, so you’ll get a chance to see the process anyway).

Our installation of Mapnik left us within the Mapnik directory, so let’s start by returning to the home directory:

cd ~

Then we can download TileMill:

wget https://github.com/mapbox/tilemill/zipball/0.1.4 --no-check-certificate

Now let’s check to confirm the name of the file we need to unpack:

dir

This command will return a list of everything in your current directory (in this case, the home directory). Amongst the files and folders listed, you should see ‘0.1.4’ (probably first). Let’s unpack it:

unzip 0.1.4

Now we have a workable TileMill folder we can use for installation, but the folder has an unwieldy name (which, inexplicably, the installation instructions fail to address). Check your directory again to find the name of the folder you just unpacked (in my case, the folder was ‘mapbox-tilemill-4ba9aea’). Let’s change that to something more reasonable:

mv mapbox-tilemill-4ba9aea tilemill
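The suffix on that folder name (4ba9aea in my case) will likely differ for you, so rather than copying it by hand you can let the shell find it. A minimal sketch, assuming exactly one mapbox-tilemill-* folder sits in the directory; `rename_tilemill` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: renames the single mapbox-tilemill-* folder in DIR
# (default: current directory) to plain "tilemill".
rename_tilemill() {
    local dir="${1:-.}"
    local matches=("$dir"/mapbox-tilemill-*/)   # trailing / matches folders only
    # With no match, bash leaves the literal pattern, which is not a directory.
    if [ ! -d "${matches[0]}" ]; then
        echo "no mapbox-tilemill-* folder in $dir" >&2
        return 1
    fi
    if [ "${#matches[@]}" -ne 1 ]; then
        echo "more than one mapbox-tilemill-* folder in $dir; rename it by hand" >&2
        return 1
    fi
    if [ -e "$dir/tilemill" ]; then
        echo "$dir/tilemill already exists" >&2
        return 1
    fi
    mv "${matches[0]%/}" "$dir/tilemill"
}
```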

At long last, we can follow the last of the instructions and finish the installation:

cd tilemill

./ndistro

Watch the code flash by. Enjoy the show. This package is still in beta, so it will probably throw up some errors during installation. None of them should be severe enough to interrupt the process, though. Feel free to ignore them.

Once the installation is complete, we’ll have to start TileMill before we can use it. This can be achieved by typing ‘./tilemill.js’ in the terminal, but TileMill actually runs in a browser (and we’ll eventually need to be able to run it in a server with no head), so let’s simplify our lives and start it through Webmin.

Go to the other computer on your network through which you usually access your server (or just stay where you are, if you’ve been doing all this through PuTTY), open the browser and start Webmin. Open the ‘Others’ page and select ‘Command Shell’. In the box to the right of the ‘Execute Command’ button, type:

cd /home/terry/tilemill

(Substitute your own username for ‘terry’.)

Click the ‘Execute Command’ button, then type in:

./tilemill.js

Click the button again (after you’ve gone through this process a couple of times, Webmin will remember these commands and you’ll be able to select them from a drop-down list of previous commands).
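Webmin isn’t the only way to keep TileMill running without a monitor. If you’re logged in over SSH instead, nohup will keep the process alive after you disconnect. A minimal sketch, assuming bash, that TileMill lives in ~/tilemill, and that ./tilemill.js starts it as described above; the function name start_tilemill and the tilemill.log file are my own inventions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: start TileMill in the background so it keeps running
# after the SSH session that launched it ends. Prints the process ID.
start_tilemill() {
  local dir="${1:-$HOME/tilemill}"
  if [ ! -x "$dir/tilemill.js" ]; then
    echo "no executable tilemill.js in $dir" >&2
    return 1
  fi
  # Run in a subshell so the caller's working directory is left alone.
  (
    cd "$dir" || exit 1
    # nohup ignores the hangup signal sent at logout; all output goes to a
    # log file and stdin comes from /dev/null, so nothing ties it to the terminal.
    nohup ./tilemill.js > tilemill.log 2>&1 < /dev/null &
    echo $!
  )
}
```

Running pid=$(start_tilemill) starts TileMill and stores its process ID; kill "$pid" stops it again, and ~/tilemill/tilemill.log shows whatever it printed while starting up.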

And now enjoy the fruits: type http://maps:8889 into the location bar of your browser (again, substitute the name of your server for ‘maps’). Gaze in awe and wonder at what you have wrought:

Take a short break and play around with the program a bit. You’ve earned it. When you’re done I’ll be waiting at the beginning of the next post.

Fellow Map Dork and good Twitter friend Don Meltz has been writing a series of blog posts about his trials and tribulations while setting up a homebrewed map server on an old Dell Inspiron (here and here). I strongly recommend giving them a read.

At the outset, Don ran his GeoSandbox on Windows XP, but recently he switched over to Ubuntu. While I applaud this decision wholeheartedly, I thought I’d take the extra step and build my own map server on a headless Ubuntu Server box. (When I say ‘headless’, I am talking about an eventual goal. To set this all up, the computer in question will initially need a monitor and keyboard plugged into it, as well as an internet connection. When the dust settles, all that need remain is the internet connection.) The following is a quick walkthrough of the process. I apologize to any non-Map Dorks who may be reading this.

The process begins, of course, with the installation of Ubuntu 10.04 Server Edition. Download it, burn it to a disk, and install it on the machine you have chosen to be your server. Read the screens that come up during installation and make the decisions that are appropriate for your life. The only one of these I feel compelled to comment on is the software selection:

The above image shows my choices (what the hell – install everything, right?). Definitely install the Samba file server. It lets your Linux server share files with Windows machines on your network. Also, be sure to install the OpenSSH server. You’ll need it. For our purposes, there’s no real reason to install a print server, and installing a mail server will cause the computer to ask you a slew of configuration questions you’re probably not prepared to answer. Give it a pass.

During the installation process, you will be asked to give your server a name. I named mine ‘maps’. So whenever I write ‘maps’, substitute the name you give your own machine.

Once your installation is complete, you will be asked to log in to your new server (using the username and password you provided during installation), after which you will be presented with a blinking white underscore (_) on a black screen. This is a command prompt, and you need not fear it. I’ll walk you through the process of using it to give yourself a friendlier way to communicate with your server. Hang tight.

Let’s begin the process by taking control of the machine. Type in “sudo su” (sans quotation marks) and hit ‘enter’. The server will ask for your password, and after you supply it, you will be able to do pretty much anything you want. You are now what is sometimes called a superuser, or root. What it means is that you are now speaking to your computer in terms it cannot ignore. This circumstance should be treated with respect. At this stage, your server will erase itself if you tell it to (and it won’t ask you whether or not you’re sure about it – it’ll just go ahead and obey your orders). So double-check your typing before you hit ‘enter’.

Now, let’s get ourselves a GUI (Graphical User Interface). The server edition we’re using doesn’t have its own GUI, and for good reasons (both resource conservation and security). Instead, we can install Webmin, a software package that allows us to connect to our server using a web browser on another computer on the same network. We’ll do this using the command line. Type in (ignore the bullets before each command. They are only there to let you know where each new line begins):

And hit ‘enter’ (I’m not going to keep repeating this. Just assume that hitting ‘enter’ is something you should do after entering each command). Follow this with:

- sudo dpkg -i webmin-current.deb

And finish it up with:

- sudo apt-get -f install

Now we have a GUI in place. If you open a browser on another computer on your network and type: https://maps:10000 into the location bar (remember to replace ‘maps’ with the name you gave your own server), you’ll be asked to supply your username and password, then you’ll see this (you may also be asked to verify Webmin’s certificate, depending on your browser):

Cool, huh? Don’t get your hopes up, though. We’re not done with the command line yet (don’t sweat it – I’ll hold your hand along the way. Besides – you should learn to be comfortable with the command line). For the moment, though, let’s take a look around the Webmin interface. There is a lot this program can do, and if you can find the time and determination it would be a good idea to learn your way through it. For now, you only need to know a few features. The first is that the initial page will notify you if any of your packages (Linux for ‘software’) have available updates. It’s a good idea to take care of them. If you want, Webmin can be told to do this automatically (on the update page you get to when you click through). The other important features are both located under the ‘Others’ menu (on the left). The first is the file manager (which bears a striking resemblance to the Windows File Manager of old), which gives you the ability to explore and modify the file system on your server (this feature runs on Java, so be sure the browser you’re using can handle it). The other is ‘Upload and Download’, which does what it says it does. Together, these two features give you the ability to put maps on your map server, something I assume you’ll want to do.

Please note the specs on my server (as pictured above). It’s not terribly different from Don’s Inspiron. I’m not suggesting you do the same, but it is worth noting that an old machine can handle this job.

Back to the command line. Let’s get OpenGeo:

- wget -qO- http://apt.opengeo.org/gpg.key | apt-key add -

- echo "deb http://apt.opengeo.org/ubuntu lucid main" >> /etc/apt/sources.list

- apt-get update

- apt-cache search opengeo

- apt-get install opengeo-suite
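One caveat about the echo line: >> appends blindly, so if you run these commands a second time (easy to do while troubleshooting), the OpenGeo repository ends up listed twice in /etc/apt/sources.list and apt will warn about a duplicate entry on every update. A minimal sketch of a guard, assuming bash; append_once is a hypothetical helper of my own, not part of apt or OpenGeo.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a line to a file only if that exact line
# is not already present, so re-running the setup steps is harmless.
append_once() {
  local line="$1" file="$2"
  # -q: quiet, -x: match the whole line, -F: treat the text as a fixed string.
  # A missing file makes grep fail, so the line is appended and the file created.
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}
```

As root, append_once "deb http://apt.opengeo.org/ubuntu lucid main" /etc/apt/sources.list does the same job as the echo line, but running it twice leaves only one copy of the repository in the file.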

Rock and roll. When your server is done doing what it needs to do, go back to the browser you used for Webmin and type http://maps:8080/dashboard/ into the location bar. Check out the OpenGeo goodness.
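If you’d like to check from a terminal on another Linux machine whether Webmin (port 10000) and the OpenGeo dashboard (port 8080) are actually listening before reaching for the browser, a small bash function can try to open a connection to each port. This is a minimal sketch under a few assumptions: the client machine runs bash and has the coreutils timeout command, and port_open and check_ports are names I made up for the example. It only tells you whether something answers on a port, not whether that something is healthy.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if host:port accepts a TCP connection.
# Relies on bash's /dev/tcp redirection, so it must run under bash, not sh.
port_open() {
  local host="$1" port="$2"
  # timeout keeps an unreachable host from hanging the check for long.
  timeout 3 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}

# Print "host:port open" or "host:port closed" for each port given.
check_ports() {
  local host="$1"
  shift
  local port
  for port in "$@"; do
    if port_open "$host" "$port"; then
      echo "$host:$port open"
    else
      echo "$host:$port closed"
    fi
  done
}
```

For my server that would be check_ports maps 10000 8080 (add 8889 once TileMill is running). A ‘closed’ result usually means the service hasn’t started yet or the server name isn’t resolving.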

Finally, to make your new server truly headless, you’re going to need some way to log in remotely (when you turn the machine on, it won’t do a damn thing until you give it a username and password). Since you listened to me earlier and installed the OpenSSH server, you’ll be able to do this. All you need is an SSH client. If you’re remotely connecting through a Linux machine, chances are you already have one. In the terminal, just type:

- ssh <username>@<computer name or IP address>

In my case, this would be:

- ssh terry@maps

You’ll be asked for a password, and then you’re in (I hear this works the same in OS X, but I cannot confirm it).

If you’re using a Windows machine – or if you just prefer a GUI – you can use PuTTY. PuTTY is very simple to use (and it comes as a standalone executable; I love programs that don’t mess with the registry). Tell it the name of the computer you want to connect to and it opens a console window asking for your username and password. Tell it what it wants to know.

It’s not a bad idea to install a new, dedicated browser for use with your new server. I used Safari, but only because I already use Firefox and Chrome for other purposes. Also, your network will probably give your server a dynamic IP address. This is not an issue for you, since your network can identify the machine by name. If you want to (and there are several valid reasons to do so), you can assign a static IP address to your server. To find out how to do so, just search around a bit at the extraordinary Ubuntu Forums. Update: It seems that Webmin provides an easy method to assign a static IP address to your server. Go to Networking → Network Configuration → Network Interfaces → Activated at Boot. Click on the name of your active connection, and you will then be able to assign a static IP address just by filling in boxes.
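For the curious: on Ubuntu 10.04, as far as I know, that Webmin form writes its settings into /etc/network/interfaces, and a static setup there looks roughly like what the sketch below prints. The interface name eth0 and every address in the usage example are placeholders, not values from my setup, and static_stanza is a hypothetical helper that only prints text; it doesn’t touch any file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a static-address stanza in the ifupdown format
# used by /etc/network/interfaces. It only prints; nothing is written.
static_stanza() {
  local iface="$1" address="$2" netmask="$3" gateway="$4"
  cat <<EOF
auto $iface
iface $iface inet static
    address $address
    netmask $netmask
    gateway $gateway
EOF
}
```

static_stanza eth0 192.168.1.50 255.255.255.0 192.168.1.1 prints a block you could use in place of the ‘iface eth0 inet dhcp’ section of /etc/network/interfaces (as root, after making a backup). Pick an address your router won’t hand out to another machine, and note that you may also need a nameserver line in /etc/resolv.conf.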

Enjoy your map server. If I can find the time, I’ll write up a post on how I added Flex Viewer, TileMill and TileStream to the server:

And 50 bonus points to anyone who understands the image at the top of this post.

When I attended Oxford about a decade ago, I took an amazingly interesting class called ‘British Perspectives of the American Revolution’. The woman who taught said class was fond of pointing out that the United States of America is really an experiment, and a young experiment at that. Whether we can call it a successful experiment will have to wait until it reaches maturity.

I think of that statement often when the internet comes up in conversation. If the United States is a young experiment, the internet is in its infancy. For some reason, people today don’t seem to realize this. Even people who were well into adulthood before the internet went mainstream somehow manage to forget that there was life before modems. While this circumstance always makes me laugh, it becomes especially funny whenever a new Internet Apocalypse looms on the horizon.

Like this latest crap about Google/Verizon and net neutrality. I’m sure you’ve heard about it – the interwebs are all abuzz and atwitter about it (I’m sure they’re all afacebook about it as well, but I have no way to verify it). In a nutshell, it’s a proposal of a framework for net neutrality. It says that the net should be free and neutral, but with notable exceptions. You can read the proposal here. First off, don’t let the title of the piece scare you. Although the word ‘legislative’ is in the title, here in America we don’t yet let major corporations draft legislation (at least not openly).

Anyway, the release of this document has Chicken Little running around and screaming his fool head off. In all his guises. Just throw a digital stone and you’ll hit someone who’s whining about it. One moron even believes that this document will destroy the internet inside of five years. Why will this occur? Ostensibly, the very possibility of tiered internet service will cause the internet to implode. Or something like that.

Let’s put that one to rest right now. The internet isn’t going away any time soon. It won’t go away simply because it is a commodity that people are willing to pay for.

Allow me to repeat that, this time with fat letters: it is a commodity. The problem we’re running into here is the mistaken belief that a neutral net is some sort of constitutionally guaranteed human right. We’re not talking about freedom of expression here (except in a most tangential fashion). We’re talking about a service – a service that cannot be delivered to us for free. Truth is, net neutrality is an attempt to dictate to providers the particulars of what it is they provide.

A neutral net would be one in which no provider is allowed to base charges according to site visited or service used. Period. It’s not about good versus evil, it’s not about corporations versus the little guy, it’s not about us versus them. What it is about is who pays for what. Should I get better access than you because I pay more? Should Google’s service get priority bandwidth because they pay more?

Predictably, our initial response to these questions is to leap to our feet and shout ‘No!’ (and believe me, kids – I’m the first one on my feet).

But should we? Seriously – what other service or commodity do we buy that follows a model anything like net neutrality? Chances are, most of you get more channels on your TV than I do. Why? Because you pay for it. I probably get faster down- and upload speeds than many of you. Why? Because I pay for it. Many people today get data plans (read: internet) on their cell phones. Why? Because they pay for it.

Doesn’t this happen because the service provider dedicates more resources to the customers who receive more and/or better service?

And then there are the fears about the corporate end of the spectrum. As one pundit put it: What would stop Verizon from getting into bed with Hulu and then providing free and open access to Hulu while throttling access to Netflix?

The short answer is: Nothing would stop them. The long answer adds: Net neutrality wouldn’t stop them either. Does anyone really believe that net neutrality would stop Verizon from emulating Facebook by forcing customers to sign into their accounts and click through 47 screens before they could ‘enable’ Netflix streaming?

And I may be missing something here, but Verizon getting into bed with Hulu and throttling Netflix sounds like a standard business practice to me. I’m not saying I agree with it, just that it doesn’t strike me as being unusual. The university I attended was littered with Coke machines. Really. Coca-Cola was everywhere on that campus. Like death and taxes, it was around every corner and behind every door. But Pepsi was nowhere to be found. It simply was not possible to procure a Pepsi anywhere on the grounds of the university. Why was it this way? Simply because Coke ponied up more money than Pepsi did when push came to shove. Oddly, nobody ever insisted they had a right to purchase Pepsi.

Why – exactly – do so many of us think that the internet should be exempt from the free market?

Gather ‘round children, and let me tell you a story. It’s about a mythical time before there was television. In the midst of that dark age, a Neanderthal hero invented the device we now know as TV. In those early times, the cavemen ‘made’ television by broadcasting programs from large antennae built for the purpose. Other cavemen watched these programs on magical boxes that pulled the TV out of thin air. Because TV came magically out of thin air, it initially seemed to be free of cost. The cavemen who made the programs and ran the stations paid for it all through advertising.

Eventually, TV became valuable enough for everyone to desire it. This led to the invention of cable as a means to get programs to the people who lived too far away from the antennae to be able to get TV out of the air. Because putting cable up on poles and running wire to people’s houses costs money, the people at the ends of the wires were charged for the service.

It wasn’t long before the cable providers hit upon the idea of offering cable to people who didn’t need it but might want it: to get more channels, or to get their existing channels at better quality. Unsurprisingly, there was much yelling of “I will not pay for something I can get for free!”, but as you know it didn’t last long. In short order cable went from ‘luxury’ to ‘necessity’.

Does any of that sound familiar? Can you see a pattern beginning to emerge? Let me give you a hint: It’s about money. The internet has never been free. It just appeared to be so because someone else was largely footing the bill (or at least it seemed that way. Truth is, you’ve been paying for it all along, and the coin you’ve been paying with is personal data). The internet – like so much of our world – is market-driven. Don’t kid yourself into thinking otherwise.

And I hate to say it, folks, but it looks as though the market is moving away from net neutrality. The simple fact that it’s being talked about so much is a clear indication that its demise is imminent. To be honest, I’m not so sure this would be a bad thing. In the short term, a lack of net neutrality would pretty much suck. In the long term, though, it could very well be the best thing for us, the average consumers.

You see, while money drives the market, the market drives competition (as well as innovation). If our Verizon/Hulu scenario actually came to pass, it wouldn’t be long before another ISP appeared in town, one that wasn’t in bed with Hulu and was willing to offer Netflix (provided, of course, that there was a demand for such a thing). Eventually, we would get to reap the benefits of price and/or service wars (much like those among cell service providers today). In fact, this could help solve one of America’s largest internet-related problems – the lack of adequate broadband providers (you’d be surprised how many Americans only have one available choice for broadband).

I don’t think we really need to fear losing net neutrality, even if it is legislated away. If enough of us truly want to have a neutral net, sooner or later someone will come along and offer to sell it to us.